We propose Neural-DynamicReconstruction (NDR), a template-free method to recover high-fidelity geometry and motions of a dynamic scene from a monocular RGB-D camera. In NDR, we adopt a neural implicit function for surface representation and rendering, so that the captured color and depth can be fully utilized to jointly optimize the surface and deformations. To represent and constrain the non-rigid deformations, we propose a novel neural invertible deforming network such that the cycle consistency between any two frames is automatically satisfied. Because the surface topology of a dynamic scene may change over time, we employ a topology-aware strategy to construct the topology-variant correspondence for the fused frames. NDR further refines the camera poses through global optimization. Experiments on public datasets and our collected dataset demonstrate that NDR outperforms existing monocular dynamic reconstruction methods.

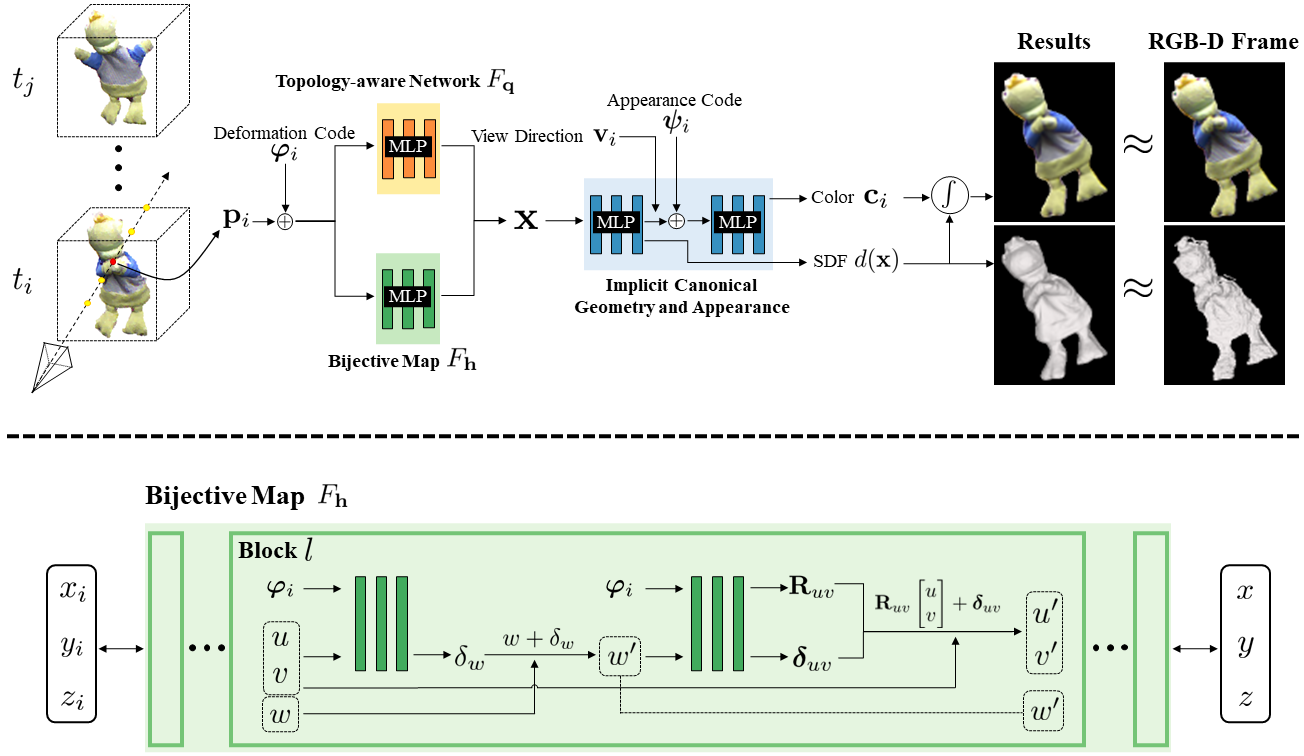

We adopt a neural SDF and a radiance field to represent, respectively, the high-fidelity geometry and appearance in the canonical space (shown at the top). In our framework, each RGB-D frame can be integrated into the canonical representation. We propose a novel neural deformation representation (shown at the bottom) that implies a continuous bijective map between the observation and canonical spaces. The designed invertible module applies a cycle consistency constraint across the whole RGB-D video while fitting the natural properties of non-rigid motion well.
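To illustrate why an invertible map gives cycle consistency for free, here is a minimal sketch in plain Python. It is not the NDR network: the functions `_shift` and `_angle` are hypothetical stand-ins for learned, frame-conditioned sub-networks, and the three-number `code` is an assumed per-frame deformation code. The only point is structural: each step changes some coordinates using a function of the coordinates it leaves untouched, so the step can be undone exactly, whatever that function is.

```python
import math

def _shift(u, v, code):
    # Hypothetical stand-in for a learned network predicting a z-offset from (x, y).
    return code[0] * math.sin(u) + code[1] * math.cos(v)

def _angle(w, code):
    # Hypothetical stand-in for a learned network predicting an in-plane rotation from z.
    return code[2] * math.tanh(w)

def to_canonical(p, code):
    """Map a point from one frame's observation space to the canonical space."""
    x, y, z = p
    z2 = z + _shift(x, y, code)      # step 1: move z, conditioned on the unchanged (x, y)
    a = _angle(z2, code)             # step 2: rotate (x, y), conditioned on the unchanged z2
    c, s = math.cos(a), math.sin(a)
    return (c * x - s * y, s * x + c * y, z2)

def to_observation(q, code):
    """Exact inverse of to_canonical for the same frame code."""
    x2, y2, z2 = q
    a = _angle(z2, code)
    c, s = math.cos(a), math.sin(a)
    x, y = c * x2 + s * y2, -s * x2 + c * y2   # undo the rotation
    z = z2 - _shift(x, y, code)                # undo the z-offset
    return (x, y, z)

def warp_between_frames(p, code_i, code_j):
    """Move a point from frame i to frame j by passing through the canonical space."""
    return to_observation(to_canonical(p, code_i), code_j)

if __name__ == "__main__":
    p = (0.3, -1.2, 0.7)
    code_i, code_j = (0.5, -0.2, 0.8), (-0.4, 0.9, 0.3)
    q = warp_between_frames(p, code_i, code_j)
    back = warp_between_frames(q, code_j, code_i)
    print(max(abs(a - b) for a, b in zip(p, back)))  # round-trip error, near machine precision
```

Because every frame-to-frame warp is composed of a map and an exact inverse, warping from frame i to frame j and back returns the starting point up to floating-point error, with no extra loss term needed to enforce it.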

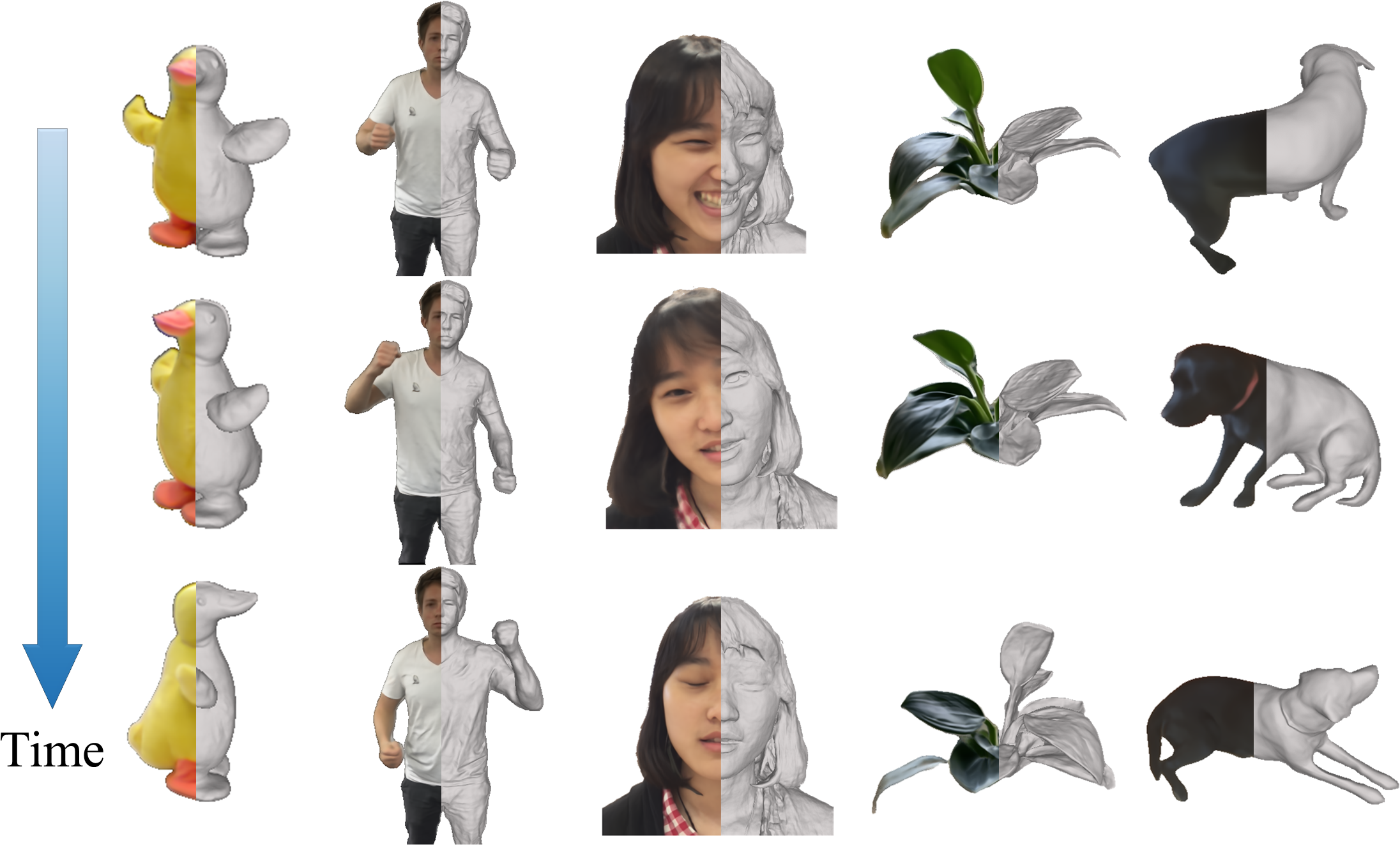

We test NDR on multiple datasets (the KillingFusion dataset [Slavcheva et al. 2017], the DeepDeform dataset [Bozic et al. 2020], and our collected dataset), which together cover various object classes and challenging cases.

We compare NDR with RGB-D-based methods and a recent RGB-based method.

We also evaluate how several key components of NDR affect the final reconstruction result.

If you find NDR useful for your work, please cite:

@inproceedings{Cai2022NDR,
  author = {Hongrui Cai and Wanquan Feng and Xuetao Feng and Yan Wang and Juyong Zhang},
  title = {Neural Surface Reconstruction of Dynamic Scenes with Monocular RGB-D Camera},
  booktitle = {Thirty-sixth Conference on Neural Information Processing Systems (NeurIPS)},
  year = {2022}
}