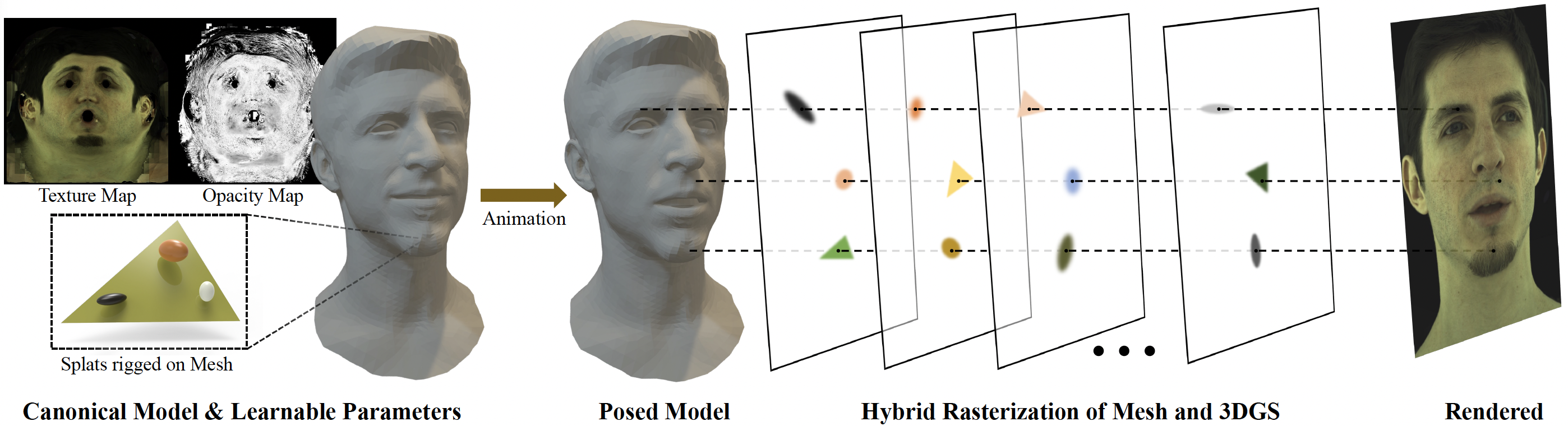

We introduce a novel approach to creating ultra-realistic head avatars and rendering them in real time (≥ 30 fps at 2048 × 1334 resolution). First, we propose a hybrid explicit representation that combines the advantages of two efficient primitive-based rendering techniques. A UV-mapped 3D mesh captures sharp and rich textures on smooth surfaces, while 3D Gaussian Splatting (3DGS) represents complex geometric structures. To model an avatar, we first track parametric models on the captured multi-view RGB videos, and then simultaneously optimize the texture and opacity maps of the mesh together with a set of 3D Gaussian splats that are localized and rigged onto the mesh facets. Specifically, we perform α-blending on the color and opacity values using the merged and re-ordered z-buffer from the rasterization results of the mesh and 3DGS. In this process, the mesh and 3DGS adaptively fit the captured visual information to produce a high-fidelity digital avatar. To avoid artifacts caused by Gaussian splats crossing the mesh facets, we design a stable hybrid depth sorting strategy. Experiments show that our results surpass those of state-of-the-art approaches.
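As a rough illustration of the α-blending step, the following sketch composites one pixel front to back from a merged, depth-sorted list of mesh and splat fragments. It is a minimal CPU sketch under stated assumptions, not the HERA renderer: the `Fragment` type, the `composite_pixel` function, and the early-termination threshold are hypothetical names and choices introduced here for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple

Color = Tuple[float, float, float]


@dataclass
class Fragment:
    """One contribution to a pixel (hypothetical type for this sketch)."""
    depth: float  # camera-space depth, smaller means closer
    color: Color  # RGB in [0, 1]
    alpha: float  # opacity in [0, 1]


def composite_pixel(
    mesh_frags: List[Fragment],
    splat_frags: List[Fragment],
    background: Color = (0.0, 0.0, 0.0),
) -> Tuple[Color, float]:
    """Front-to-back alpha blending over merged mesh and splat fragments.

    Returns the blended color and the remaining transmittance.
    """
    # Merge both rasterizer outputs and re-order them by depth.
    merged = sorted(mesh_frags + splat_frags, key=lambda f: f.depth)

    out = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed
    for frag in merged:
        weight = transmittance * frag.alpha
        for c in range(3):
            out[c] += weight * frag.color[c]
        transmittance *= 1.0 - frag.alpha
        if transmittance < 1e-4:  # assumed cutoff: pixel is effectively opaque
            break

    # Whatever light is left shows the background.
    for c in range(3):
        out[c] += transmittance * background[c]
    return (out[0], out[1], out[2]), transmittance
```

For example, a half-transparent green splat in front of an opaque red mesh fragment blends to an even mix of the two, while the same splat placed behind the mesh contributes nothing.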

The overall pipeline of the proposed HERA. In the canonical space, there is a mesh with a texture UV map (visualized in RGB format) and an opacity UV map, along with several Gaussian splats defined in the local coordinate systems of the mesh facets. During animation, the positions of the mesh vertices change, causing the rigged splats to move accordingly. Under the camera view, both the mesh and the Gaussian splats are rasterized using the proposed hybrid approach, and the image is rendered through α-blending. The entire pipeline is fully differentiable. Guided by the captured image, the texture map and the opacity map are optimized while the rigged Gaussian splats are updated and densified simultaneously.
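To make the rigging idea concrete, the sketch below stores a splat center in the local frame of one triangle and recomputes its world position after the triangle moves. The frame convention (origin at the centroid, first axis along an edge, third axis along the normal) is an assumption chosen for illustration; this page does not specify the parameterization HERA uses, and per-facet scaling is omitted for brevity. `facet_frame` and `splat_world_position` are hypothetical helper names.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def normalize(a: Vec3) -> Vec3:
    length = math.sqrt(a[0] ** 2 + a[1] ** 2 + a[2] ** 2)
    return (a[0] / length, a[1] / length, a[2] / length)


def facet_frame(v0: Vec3, v1: Vec3, v2: Vec3):
    """Assumed local frame of a triangle: centroid origin, orthonormal axes."""
    origin = tuple((v0[i] + v1[i] + v2[i]) / 3.0 for i in range(3))
    e1 = normalize(sub(v1, v0))                      # along the first edge
    n = normalize(cross(sub(v1, v0), sub(v2, v0)))   # facet normal
    e2 = cross(n, e1)                                # completes the frame
    return origin, (e1, e2, n)


def splat_world_position(local: Vec3, v0: Vec3, v1: Vec3, v2: Vec3) -> Vec3:
    """Map a splat center from facet-local coordinates to world space."""
    origin, (e1, e2, n) = facet_frame(v0, v1, v2)
    return tuple(
        origin[i] + local[0] * e1[i] + local[1] * e2[i] + local[2] * n[i]
        for i in range(3)
    )
```

Because only the triangle vertices change between frames, the same `local` offset follows the facet through translation and rotation, which is the behavior the caption describes for rigged splats.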

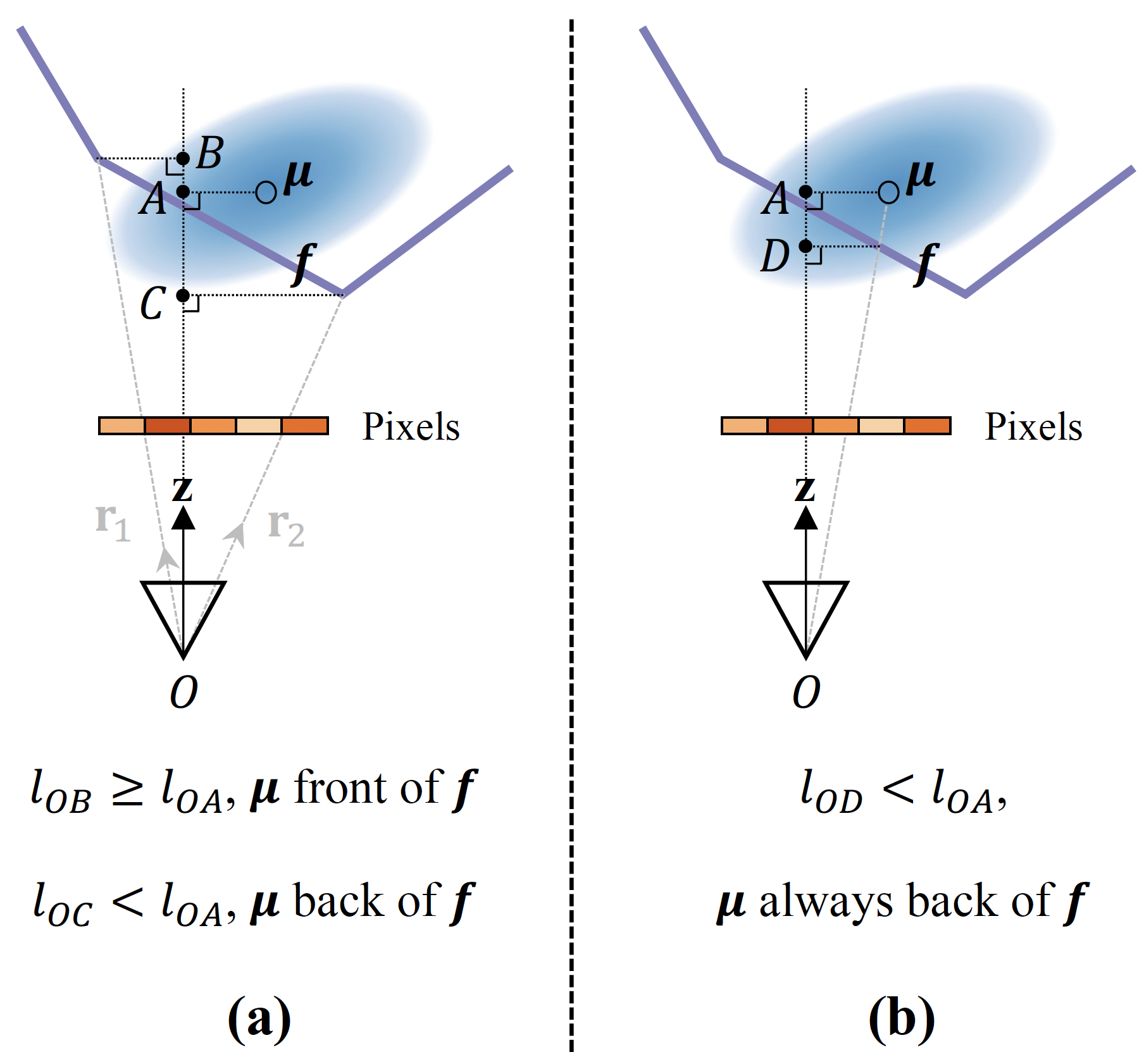

To sort the depths of different geometric primitives, we propose a strategy that prevents Gaussian splats from crossing mesh facets. Instead of (a) directly comparing the 3DGS depth (a single value per splat, not a per-pixel value) with the mesh depth (a per-pixel value), (b) we compare the GS depth with the depth of the projected point, which yields a stable ordering.
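The difference between the two options can be sketched on a toy depth buffer. This is one possible reading of the caption, not the authors' implementation: it assumes that the "projected point" is the mesh point visible at the pixel where the splat center projects, and that the resulting front/behind decision is reused for every pixel the splat covers. The `Splat` type and both functions are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Pixel = Tuple[int, int]  # (row, col)


@dataclass
class Splat:
    """Screen-space summary of one Gaussian (hypothetical type)."""
    center_px: Pixel        # pixel where the splat center projects
    depth: float            # single depth value for the whole splat
    footprint: List[Pixel]  # pixels the splat covers


def naive_order(mesh_depth: List[List[float]], splat: Splat) -> Dict[Pixel, bool]:
    """Option (a): compare the splat depth with the mesh depth at every pixel.

    The result can flip inside one footprint, so the splat appears to
    cross the mesh surface.
    """
    return {px: splat.depth < mesh_depth[px[0]][px[1]] for px in splat.footprint}


def stable_order(mesh_depth: List[List[float]], splat: Splat) -> Dict[Pixel, bool]:
    """Option (b), as assumed here: decide once at the projected center.

    Pixels without mesh coverage can hold float('inf') so that the splat
    is treated as being in front there.
    """
    row, col = splat.center_px
    in_front = splat.depth < mesh_depth[row][col]
    return {px: in_front for px in splat.footprint}


# A slanted mesh: depth grows from left to right across one image row.
mesh_depth = [[1.0, 1.5, 2.0, 2.5, 3.0]]
splat = Splat(center_px=(0, 2), depth=1.9, footprint=[(0, c) for c in range(5)])

naive = naive_order(mesh_depth, splat)    # mixed True/False across the footprint
stable = stable_order(mesh_depth, splat)  # one consistent decision
```

In this toy case the naive comparison places the splat behind the mesh on the two leftmost pixels and in front on the rest, while the per-splat decision keeps it in front everywhere.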

HERA renders an avatar at a resolution of 2048 × 1334 at approximately 81 FPS and achieves a PSNR of 34.0 ± 0.5 dB.

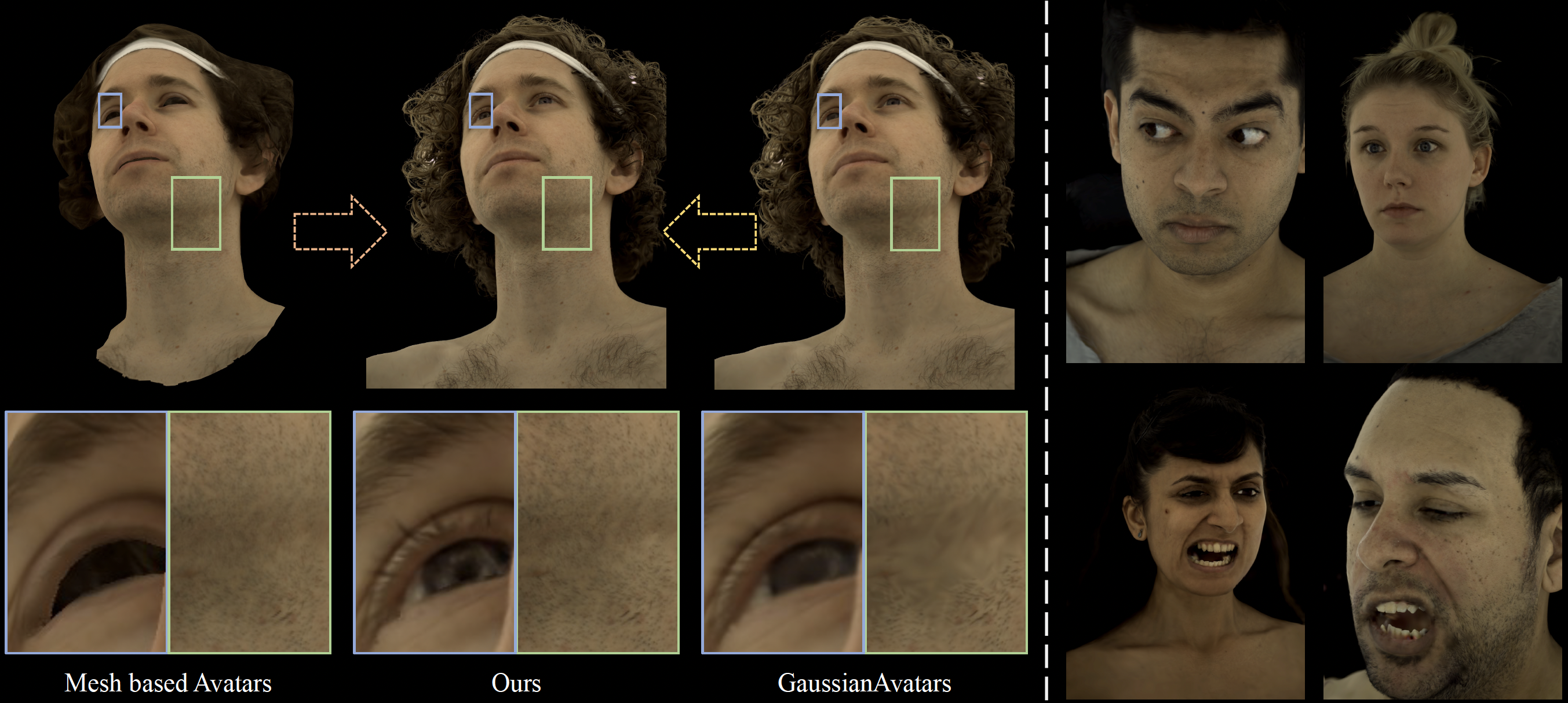

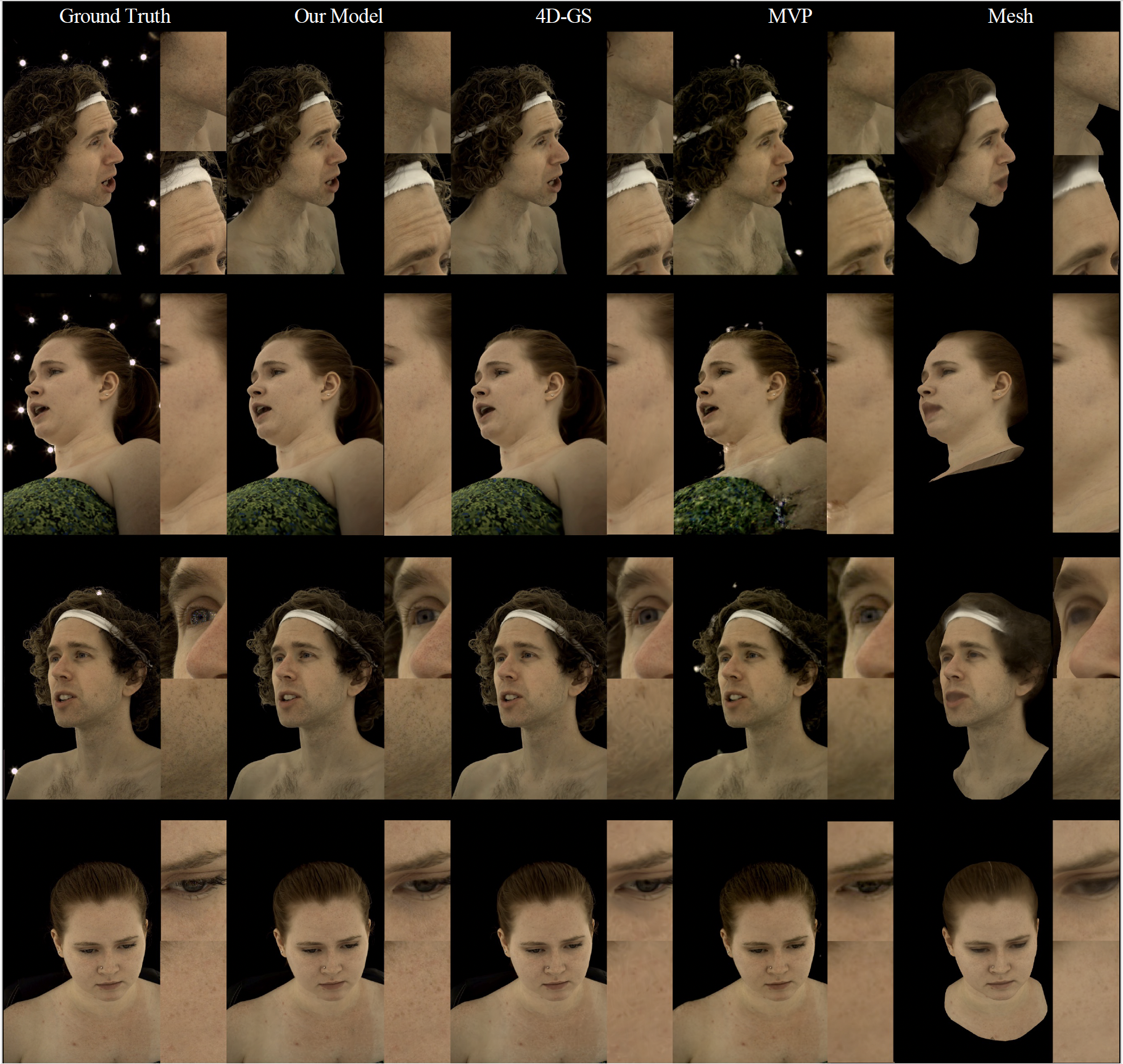

We compare HERA with state-of-the-art methods for animatable avatars.

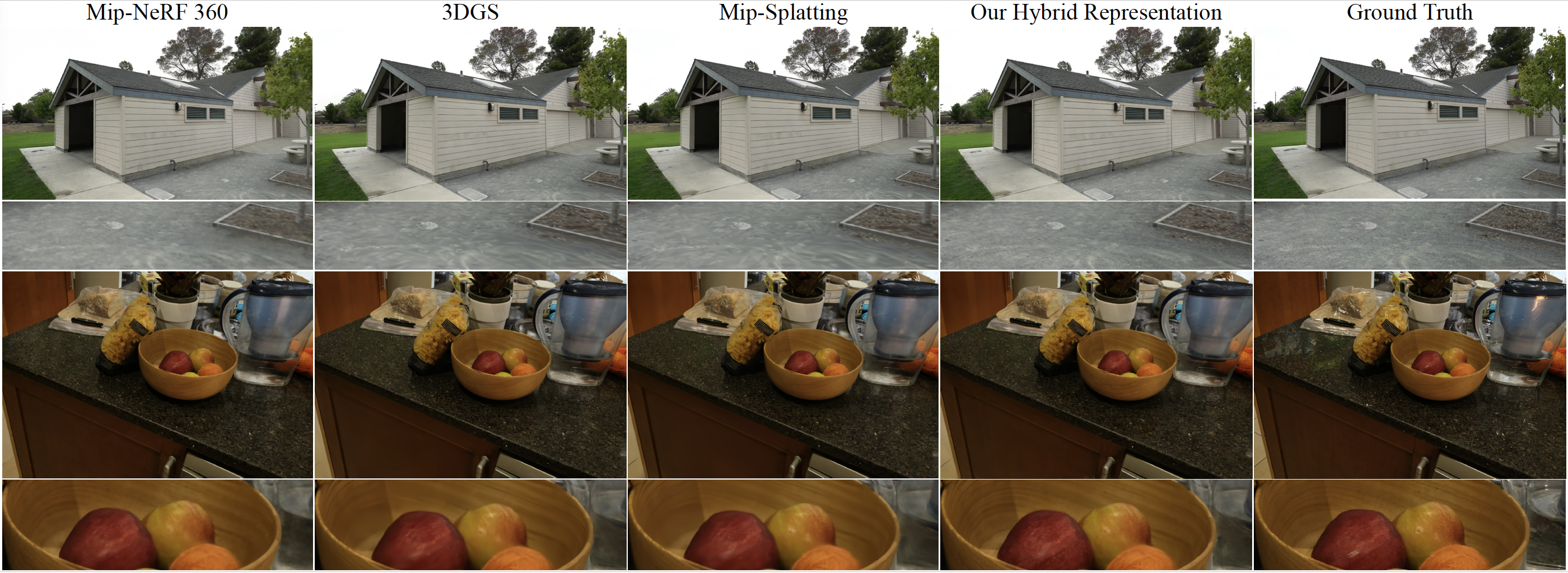

We conduct comparisons of our proposed hybrid representation on novel view synthesis in both static and dynamic scenes.

If you find HERA useful for your work, please cite:

@inproceedings{Cai2025HERA,

author = {Hongrui Cai and Yuting Xiao and Xuan Wang and Jiafei Li and Yudong Guo and Yanbo Fan and Shenghua Gao and Juyong Zhang},

title = {HERA: Hybrid Explicit Representation for Ultra-Realistic Head Avatars},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2025}

}