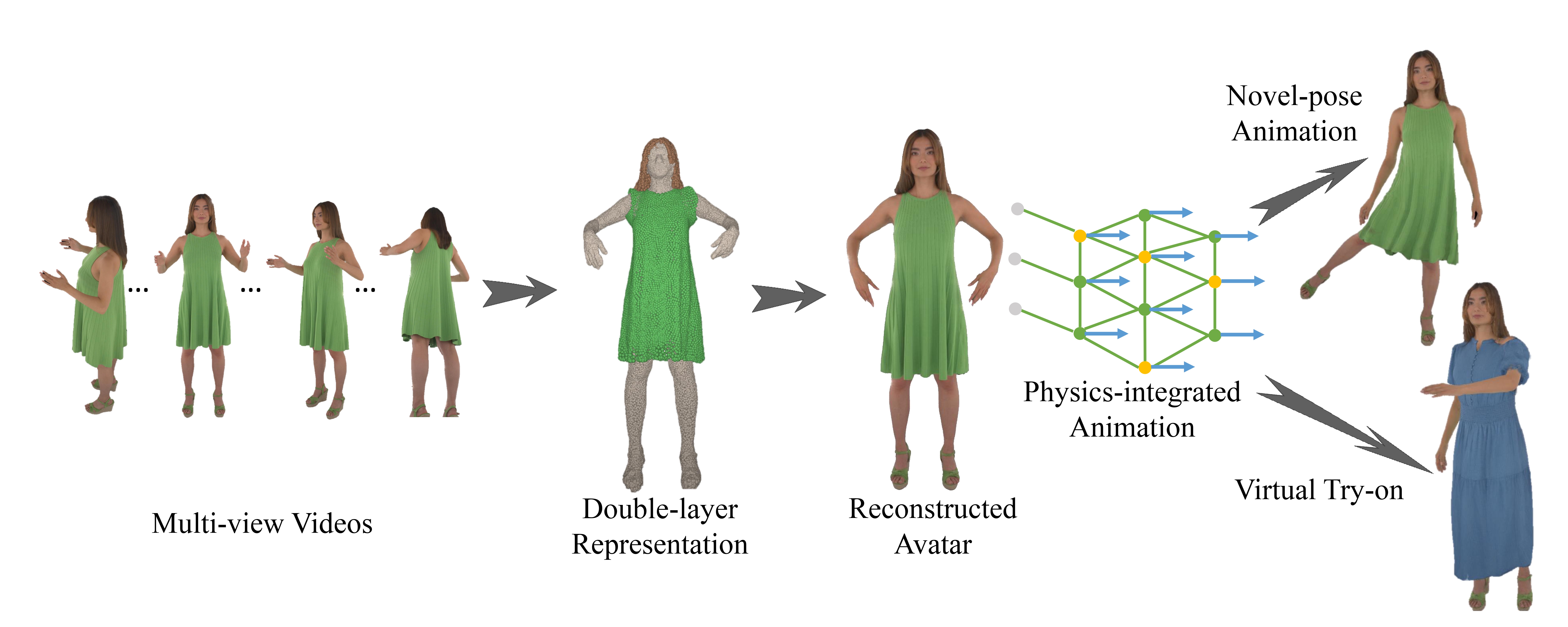

We introduce PICA, a novel representation for high-fidelity animatable clothed human avatars with physics-accurate dynamics, even for loose clothing. Previous neural rendering-based representations of animatable clothed humans typically employ a single model to represent both the clothing and the underlying body. While efficient, these approaches often fail to accurately represent complex garment dynamics, leading to incorrect deformations and noticeable rendering artifacts, especially for sliding or loose garments. Furthermore, previous works represent garment dynamics as pose-dependent deformations and facilitate novel pose animations in a data-driven manner. This often results in outcomes that do not faithfully represent the mechanics of motion and are prone to generating artifacts in out-of-distribution poses. To address these issues, we adopt two individual 3D Gaussian Splatting (3DGS) models with different deformation characteristics, modeling the human body and clothing separately. This distinction allows for better handling of their respective motion characteristics. With this representation, we integrate a GNN-based clothed body physics simulation module to ensure an accurate representation of clothing dynamics.

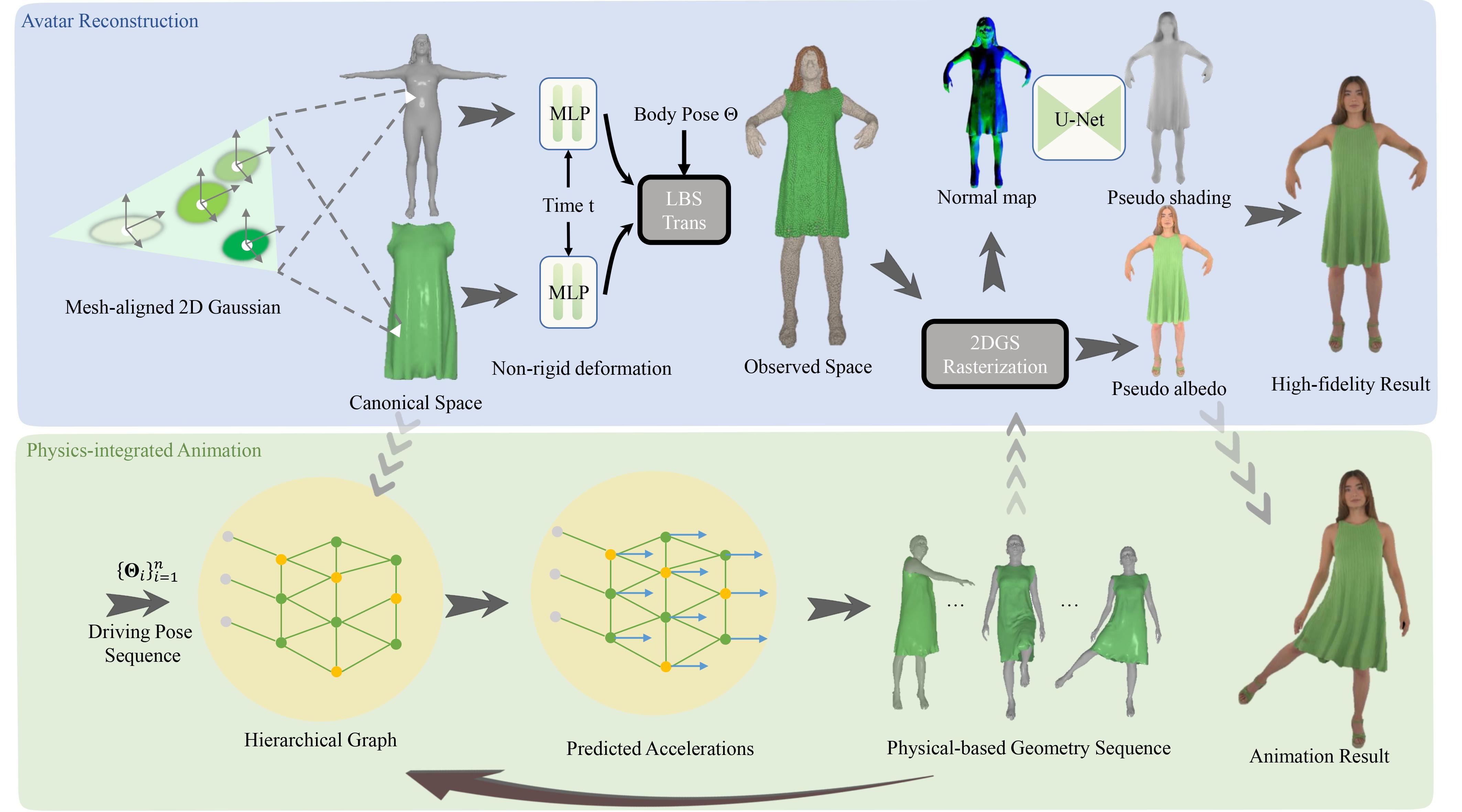

PICA represents clothed human avatars as two separate template meshes and corresponding mesh-aligned Gaussians. The avatar in canonical space is first deformed to observed space by non-rigid deformation and LBS, and then rasterized to the image space of the given camera with a pose-dependent color MLP. After reconstructing the avatar with appearance loss and geometry loss, PICA utilizes a hierarchical graph-based neural dynamics simulator to generate the simulation geometry sequence, which is rendered to the final animation result according to the trained appearance model.
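The canonical-to-observed deformation described above (a non-rigid offset followed by LBS) can be illustrated with a minimal sketch. This is not PICA's implementation: the function names, the 3x4 `[R | t]` bone layout, and the idea of passing the non-rigid offset in as a plain vector are all assumptions made for illustration. It also only moves a point such as a Gaussian center or mesh vertex, and leaves out how Gaussian rotations or covariances would be transformed.

```python
from typing import List, Sequence

Vec3 = Sequence[float]
Mat3x4 = Sequence[Sequence[float]]  # hypothetical layout: three rows of [R | t]


def blend_transforms(weights: Sequence[float], bones: Sequence[Mat3x4]) -> List[List[float]]:
    """Weighted sum of per-bone 3x4 rigid transforms (the linear blend in LBS)."""
    blended = [[0.0] * 4 for _ in range(3)]
    for w, bone in zip(weights, bones):
        for r in range(3):
            for c in range(4):
                blended[r][c] += w * bone[r][c]
    return blended


def deform_point(x_canonical: Vec3, offset: Vec3,
                 weights: Sequence[float], bones: Sequence[Mat3x4]) -> List[float]:
    """Map a canonical point to observed space: add the non-rigid offset, then apply LBS."""
    # Non-rigid deformation, assumed here to be a given per-point offset.
    x = [x_canonical[i] + offset[i] for i in range(3)]
    # Linear blend skinning with the blended bone transform.
    T = blend_transforms(weights, bones)
    return [T[r][0] * x[0] + T[r][1] * x[1] + T[r][2] * x[2] + T[r][3] for r in range(3)]


# Toy example: two bones, one at identity and one translated by 2 along x.
identity = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
shifted = [[1.0, 0.0, 0.0, 2.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
observed = deform_point([1.0, 0.0, 0.0], [0.0, 0.1, 0.0], [0.5, 0.5], [identity, shifted])
print(observed)
```

With equal skinning weights, the point is carried halfway between the two bone transforms, so the toy example moves it by 1 along x on top of the small non-rigid offset in y.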

We compare our method with two representative works for novel view synthesis and novel pose animation.

We ablate the double-layer representation, the pose-dependent color, the regularization loss terms, and the number of input views in our algorithm.

We show novel pose animation results here. The pose sequences are taken from the ActorsHQ and AMASS datasets.

PICA is capable of replacing the clothes and animating the newly dressed avatars.

If you find PICA useful for your work, please cite:

@article{peng2024pica,
  title={PICA: Physics-Integrated Clothed Avatar},
  author={Peng, Bo and Tao, Yunfan and Zhan, Haoyu and Guo, Yudong and Zhang, Juyong},
  journal={arXiv preprint arXiv:2407.05324},
  year={2024}
}