In this paper, we introduce Neural-ABC, a novel parametric model based on neural implicit functions that represents clothed human bodies with disentangled latent spaces for identity, clothing, shape, and pose. Traditional mesh-based representations struggle to represent articulated bodies with clothes because of the diversity of human body shapes and clothing styles and the complexity of poses. Our model provides a unified parametric framework for the identity, clothing, shape, and pose of the clothed human body. It uses neural implicit functions as the underlying representation and integrates well-designed structures to meet the requirements of such a model. Specifically, we represent the underlying body as a signed distance function and clothing as an unsigned distance function, and both can be uniformly represented as unsigned distance fields. As a result, different types of clothing do not require predefined topological structures or classifications, and they can follow changes in the underlying body to fit it. Additionally, we construct poses using a controllable articulated structure. The model is trained on both open datasets and newly constructed ones, using a carefully designed decoupling strategy. Our model excels at disentangling clothing and identity across different shapes and poses while preserving the style of the clothing. We demonstrate that Neural-ABC fits new observations of different types of clothing. Compared to other state-of-the-art parametric models, Neural-ABC shows clear advantages in the reconstruction of clothed human bodies, as evidenced by fitting raw scans, depth maps, and images.
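To make the SDF/UDF distinction concrete, here is a minimal sketch using analytic shapes instead of learned networks. The sphere standing in for the body, the open tube standing in for a garment, and all function names are hypothetical. Combining the two fields with a pointwise minimum (the union of the two surfaces) is our assumption for illustration, not necessarily how Neural-ABC composes them.

```python
import numpy as np

def body_sdf(points, radius=1.0):
    # Hypothetical body stand-in: a sphere. Signed: negative inside, positive outside.
    return np.linalg.norm(points, axis=-1) - radius

def garment_udf(points, radius=1.05, half_height=0.5):
    # Hypothetical garment stand-in: an open tube around the z-axis with no caps.
    # An open surface has no inside/outside, so only an unsigned distance makes sense.
    rho = np.linalg.norm(points[..., :2], axis=-1)
    radial = rho - radius
    axial = np.maximum(np.abs(points[..., 2]) - half_height, 0.0)  # distance past the rim
    return np.sqrt(radial**2 + axial**2)

def clothed_udf(points):
    # |SDF| turns the body into an unsigned field; the minimum of the two UDFs
    # is the distance to the nearest of the two surfaces (assumed composition).
    return np.minimum(np.abs(body_sdf(points)), garment_udf(points))

queries = np.array([[0.0, 0.0, 0.0],    # body center
                    [1.05, 0.0, 0.0],   # lies on the garment surface
                    [0.0, 0.0, 2.0]])   # above the head
print(clothed_udf(queries))
```

Because every value is a non-negative distance, body and clothing can be queried, fitted, and combined with the same machinery, which is the practical benefit of the uniform UDF representation described above.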

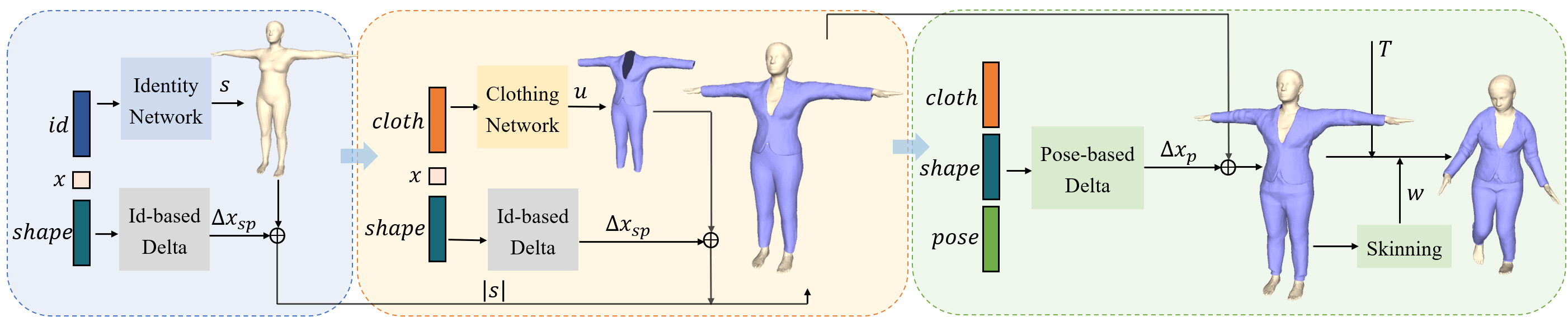

Overview of the Neural-ABC pipeline. Given query points and latent codes, our model generates the corresponding implicit representation of the human body through three modules.
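The idea of mapping query points plus latent codes to an implicit value can be sketched with a single toy conditional decoder. This does not reproduce the three modules of the actual pipeline: the untrained random MLP, the 8-dimensional codes, and the names `init_mlp` and `decode_udf` are all hypothetical, chosen only to show how per-attribute codes are broadcast to every query point.

```python
import numpy as np

ATTRIBUTES = ("identity", "clothing", "shape", "pose")

def init_mlp(sizes, rng):
    # Hypothetical, untrained weights: one (W, b) pair per layer.
    return [(rng.normal(0.0, 1.0 / np.sqrt(m), (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp(params, x):
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1:
            x = np.maximum(x, 0.0)  # ReLU on hidden layers only
    return x

def decode_udf(params, points, codes):
    # points: (N, 3); codes: dict of 1-D latent vectors, one per attribute.
    z = np.concatenate([codes[k] for k in ATTRIBUTES])
    z = np.broadcast_to(z, (points.shape[0], z.shape[0]))  # same codes for every point
    raw = mlp(params, np.concatenate([points, z], axis=-1))[:, 0]
    return np.logaddexp(0.0, raw)  # softplus keeps the predicted distance non-negative

rng = np.random.default_rng(0)
params = init_mlp([3 + 4 * 8, 64, 64, 1], rng)
codes = {k: rng.normal(size=8) for k in ATTRIBUTES}
points = rng.uniform(-1.0, 1.0, size=(5, 3))
print(decode_udf(params, points, codes))
```

Keeping one code per attribute, rather than a single entangled vector, is what lets each attribute be edited independently at inference time.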

Synthesis process of DressUp. First, we prepare sewing patterns and virtual body models. We then align each sewing pattern to the body model, dress the model through physical simulation, and finally morph the dressed model into the specified poses.

We prepare a large number of sewing patterns and drape them onto body models to build the dataset.

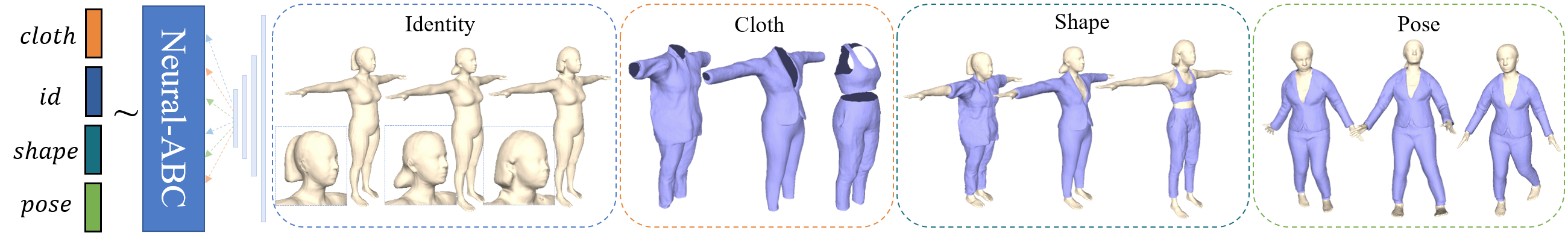

Our method can disentangle generation for identity, clothing, shape and pose.

By modifying the four latent codes for identity, shape, clothing, and pose, the corresponding clothed body geometry can be generated. The four attributes are fully decoupled.

The latent spaces of identity, clothing, shape, and pose can be interpolated to obtain continuous generation results, which indicates that the learned latent spaces are continuous.
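Interpolating one attribute while freezing the others can be sketched as plain linear interpolation between two latent codes. The function name, the dict-of-codes layout, and the 8-dimensional codes are hypothetical; the resulting codes would then be passed to the decoder frame by frame.

```python
import numpy as np

def interpolate_codes(codes_a, codes_b, attribute, steps):
    # Linearly blend one attribute's code from codes_a to codes_b while every
    # other attribute stays fixed at its value in codes_a.
    frames = []
    for t in np.linspace(0.0, 1.0, steps):
        frame = dict(codes_a)  # shallow copy; untouched codes are shared, not mutated
        frame[attribute] = (1.0 - t) * codes_a[attribute] + t * codes_b[attribute]
        frames.append(frame)
    return frames

rng = np.random.default_rng(0)
names = ("identity", "clothing", "shape", "pose")
codes_a = {k: rng.normal(size=8) for k in names}
codes_b = {k: rng.normal(size=8) for k in names}

# Change only the clothing; identity, shape, and pose stay those of codes_a.
frames = interpolate_codes(codes_a, codes_b, "clothing", steps=5)
```

The same helper covers the single-attribute edits shown below: passing `"shape"` or `"identity"` as the attribute varies only that code. Spherical interpolation is a common alternative when codes are sampled from a Gaussian prior.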

For a given identity, the clothing can be changed smoothly by modifying only the clothing code.

For a given clothed identity, the body shape can be changed smoothly by modifying the shape code.

By modifying the identity code, the geometry of the underlying human body can be edited.

We show the fitting results of Neural-ABC and the edits applied to the fitted models.

We show the results of fitting monocular depth sequences.
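Fitting a parametric implicit model to depth usually means back-projecting depth pixels into 3D points and optimizing the latent codes so that the model's distance field vanishes at those points. The sketch below shows that loop on a deliberately tiny model: a sphere whose radius is `1 + z` for a single scalar "shape code" `z`, with a known center. The model, the names, the pinhole intrinsics, and the learning rate are all hypothetical; the actual method optimizes learned codes through a neural network.

```python
import numpy as np

def backproject(depth, fx, fy, cx, cy):
    # Pinhole back-projection of valid (depth > 0) pixels into camera-space points.
    v, u = np.mgrid[0:depth.shape[0], 0:depth.shape[1]]
    valid = depth > 0
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.stack([x, y, z], axis=-1)

def fit_radius_code(points, center, z0=0.0, lr=0.5, iters=100):
    # Gradient descent on mean(udf^2) for the toy sphere model with radius 1 + z.
    dist = np.linalg.norm(points - center, axis=-1)
    z = z0
    for _ in range(iters):
        residual = dist - (1.0 + z)    # signed distance of each point to the surface
        grad = -2.0 * residual.mean()  # derivative of mean(residual**2) w.r.t. z
        z -= lr * grad
    return z

def fit_sequence(point_frames, center):
    # Warm-start each frame from the previous estimate, as is common for sequences.
    codes, z = [], 0.0
    for pts in point_frames:
        z = fit_radius_code(pts, center, z0=z)
        codes.append(z)
    return codes

rng = np.random.default_rng(1)
dirs = rng.normal(size=(500, 3))
dirs /= np.linalg.norm(dirs, axis=-1, keepdims=True)
center = np.array([0.0, 0.0, 3.0])
observed = center + 1.3 * dirs  # synthetic observations on a sphere of radius 1.3
z = fit_radius_code(observed, center)
print(f"fitted radius: {1.0 + z:.3f}")
```

For a real single-view depth map only the visible surface is observed, so in practice the fitting energy is typically regularized (for example with priors on the codes) rather than relying on the data term alone.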

The dressed human body geometry obtained from our model can be enhanced with finer clothing details by incorporating additional deformation modules. For reconstruction results, non-rigid registration can additionally be applied to recover finer clothing wrinkles.

The body and clothing fitted using Neural-ABC can be employed with professional animation techniques to generate motion sequences that include intricate wrinkles.
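One widely used technique for driving extracted body and garment meshes with a skeleton is linear blend skinning (LBS), where each posed vertex is a weighted blend of per-joint rigid transforms: v' = Σ_j w_j (R_j v + t_j). The source does not say which animation techniques were used, so this is only an illustrative sketch; the weights, joint transforms, and function names are hypothetical, and the transforms are assumed to already map rest pose to posed space (real rigs also compose inverse bind matrices).

```python
import numpy as np

def rotation_z(angle):
    # Rotation matrix about the z-axis.
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def linear_blend_skinning(vertices, weights, rotations, translations):
    # vertices: (V, 3); weights: (V, J) with rows summing to 1;
    # rotations: (J, 3, 3); translations: (J, 3).
    per_joint = np.einsum("jab,vb->vja", rotations, vertices) + translations[None]  # (V, J, 3)
    return np.einsum("vj,vja->va", weights, per_joint)  # blend the J candidate positions

vertices = np.array([[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
weights = np.array([[1.0, 0.0],   # first vertex follows joint 0 only
                    [0.5, 0.5]])  # second vertex is split between both joints
rotations = np.stack([np.eye(3), rotation_z(np.pi / 2)])
translations = np.zeros((2, 3))
posed = linear_blend_skinning(vertices, weights, rotations, translations)
# First vertex stays at (1, 0, 0); second moves to (0.5, 0.5, 0).
```

Plain LBS is fast but known to collapse volume near strongly bent joints, which is one reason garment wrinkles are often added afterwards by simulation or learned deformation.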

If you find our paper useful for your work, please cite:

@article{Chen2024NeuralABC,
  author = {Honghu Chen and Yuxin Yao and Juyong Zhang},
  title = {Neural-ABC: Neural Parametric Models for Articulated Body with Clothes},
  journal = {IEEE Transactions on Visualization and Computer Graphics},
  year = {2024},
  publisher = {IEEE}
}