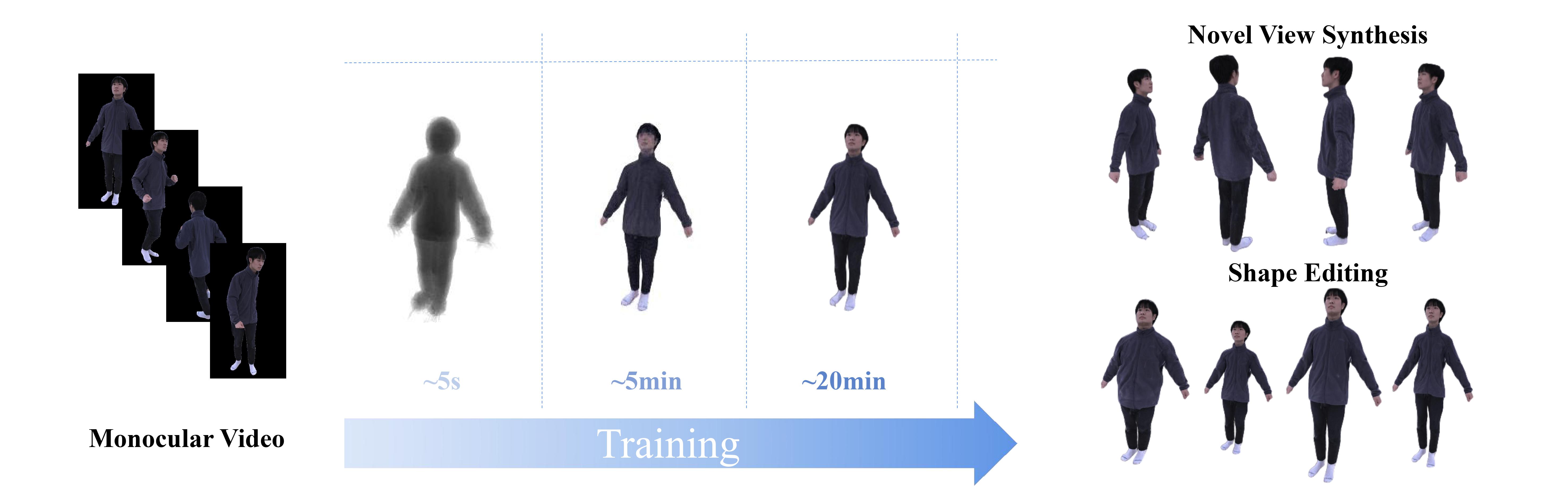

Recently, many works have been proposed to utilize the neural radiance field for novel view synthesis of human performers. However, most of these methods require hours of training, which makes them impractical. To address this problem, we propose IntrinsicNGP, which can train from scratch and achieve high-fidelity results in about twenty minutes from videos of a human performer. To this end, we introduce a continuous and optimizable intrinsic coordinate in place of the original explicit Euclidean coordinate in the hash encoding module of instant-NGP. With this novel intrinsic coordinate, IntrinsicNGP can aggregate inter-frame information for dynamic objects with the help of proxy geometry shapes. Moreover, the results trained with the given rough geometry shapes can be further refined with an optimizable offset field based on the intrinsic coordinate. Extensive experimental results on several datasets demonstrate the effectiveness and efficiency of IntrinsicNGP. We also illustrate our approach's ability to edit the shape of reconstructed subjects.

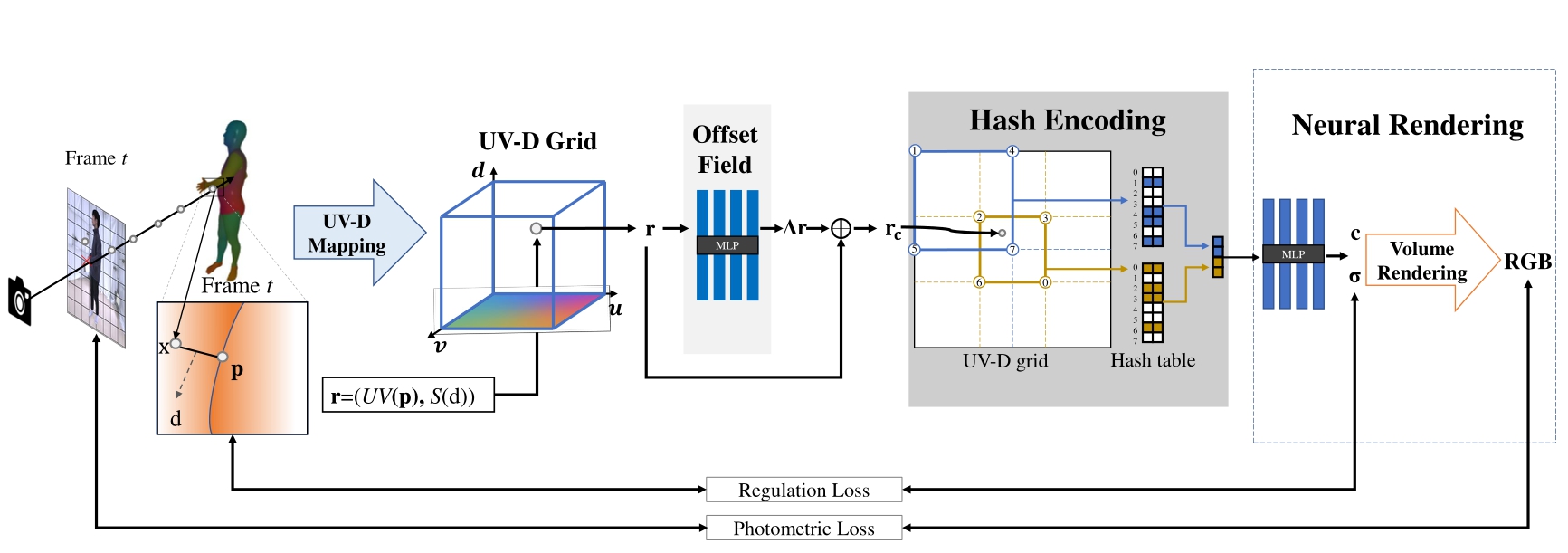

Overview of our method. Given a sample point at any frame, we first obtain an intrinsic coordinate conditioned on the human body surface, so that information from corresponding points in different frames can be aggregated. An offset field then refines the intrinsic coordinate to model detailed non-rigid deformation. Finally, we apply multi-resolution hash encoding to the refined coordinate to obtain a feature, which is the encoded input from which the NeRF MLP regresses color and density.
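The data flow in the overview can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the released implementation: it assumes the intrinsic coordinate is already available as a normalized triple `(u, v, d)` in `[0, 1]`, it conditions the offset on a per-frame code (our assumption), and the names `hash_encode`, `offset_field`, `nerf_mlp`, `query`, and all sizes and weights are hypothetical. The hashing follows the spatial hash with trilinear interpolation described for instant-NGP.

```python
import numpy as np

# Spatial-hash primes from the instant-NGP paper.
PRIMES = np.array([1, 2654435761, 805459861], dtype=np.uint64)

def hash_encode(coords, tables, base_res=16, growth=1.5):
    """Multi-resolution hash encoding with trilinear interpolation.
    coords: (N, 3) in [0, 1]; tables: (levels, table_size, feat_dim)."""
    n_levels, table_size, _ = tables.shape
    feats = []
    for level in range(n_levels):
        res = int(base_res * growth ** level)
        x = coords * res
        x0 = np.floor(x).astype(np.uint64)   # lower grid corner
        w = x - x0                           # fractional position in the cell
        out = 0.0
        for corner in range(8):
            o = np.array([(corner >> k) & 1 for k in range(3)], dtype=np.uint64)
            h = np.bitwise_xor.reduce((x0 + o) * PRIMES, axis=1)
            idx = (h % np.uint64(table_size)).astype(np.int64)
            weight = np.prod(np.where(o == 1, w, 1.0 - w), axis=1, keepdims=True)
            out = out + weight * tables[level, idx]
        feats.append(out)
    return np.concatenate(feats, axis=1)

def offset_field(uvd, frame_code, W):
    """Hypothetical stand-in for the offset field: a small, bounded,
    frame-conditioned correction to the intrinsic coordinate."""
    code = np.broadcast_to(frame_code, (len(uvd), len(frame_code)))
    return 0.01 * np.tanh(np.concatenate([uvd, code], axis=1) @ W)

def nerf_mlp(feat, W1, W2):
    """Tiny two-layer MLP: sigmoid for color, softplus for density."""
    out = np.maximum(feat @ W1, 0.0) @ W2
    rgb = 1.0 / (1.0 + np.exp(-out[:, :3]))
    sigma = np.log1p(np.exp(out[:, 3]))
    return rgb, sigma

def query(uvd, frame_code, params):
    # 1) refine the intrinsic coordinate, 2) hash-encode it, 3) decode.
    uvd = np.clip(uvd + offset_field(uvd, frame_code, params["W_off"]), 0.0, 1.0)
    feat = hash_encode(uvd, params["tables"])
    return nerf_mlp(feat, params["W1"], params["W2"])

# Random (untrained) parameters, only to exercise the shapes.
rng = np.random.default_rng(0)
L, T, F = 4, 2 ** 10, 2
params = {
    "tables": rng.normal(scale=1e-2, size=(L, T, F)),
    "W_off": rng.normal(size=(3 + 4, 3)),
    "W1": rng.normal(size=(L * F, 16)),
    "W2": rng.normal(size=(16, 4)),
}
uvd = rng.random((5, 3))          # assumed normalized (u, v, d) samples
frame_code = rng.normal(size=4)   # assumed per-frame latent code
rgb, sigma = query(uvd, frame_code, params)
```

Because the hash tables are indexed by the intrinsic coordinate rather than by a world-space position, samples from different frames that map to the same `(u, v, d)` read and update the same table entries, which is how a single set of tables can aggregate observations across frames.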

We test our method on multiple datasets: the ZJU-Mocap dataset [Peng et al. 2021], the People-Snapshot dataset [Alldieck et al. 2018], and our own collected dataset.

We compare our method with state-of-the-art implicit human novel view synthesis methods.

We evaluate the offset field and UV-D representation in our algorithm.

If you find IntrinsicNGP useful for your work, please cite:

@article{peng2023intrinsicngp,
  title={IntrinsicNGP: Intrinsic Coordinate based Hash Encoding for Human NeRF},
  author={Peng, Bo and Hu, Jun and Zhou, Jingtao and Gao, Xuan and Zhang, Juyong},
  journal={IEEE Transactions on Visualization and Computer Graphics},
  year={2023},
  publisher={IEEE}
}